ロンドン大学で MSc Computer Science: Computer systems モジュールを履修中。

講義内容に関して記録した個人的なスタディノートです。

全 12 週のうち 6〜12 週目の内容を記録します。(6 週目開始:2025 年 2 月 10 日 / 12 週目終了:2025 年 3 月 31 日)

Week 6: Processes and threads #

概要

This week introduces you to operating systems, which are software packages that coordinate a computer’s internal activities as well as oversee its communication with the outside world. It is a computer’s operating system that transforms the computer hardware into a useful tool. The goal is to understand what operating systems do and how they do it.

キーコンセプト

This topic includes:

- components of an operating system

- the concept of a process

- handling competition between processes

- attacks from outside and within

- implementation

- scheduling

- inter-process communication

- synchronisation.

レクチャーリスト

- Process Description and Control

- Lecture 1: Introduction to processes and threads

- Lecture 2: What is a process?

- Lecture 3: Process states

- Lecture 4: Process description

- Lecture 5: Process control

- Lecture 6: Execution of the operating system

- Threads

- Lecture 7: Processes and threads

- Lecture 8: Types of threads

- Lecture 9: Multicore and multithreading

- Scheduling

- Lecture 10: Types of processor scheduling

- Lecture 11: Scheduling algorithms

Lecture 1: Introduction to processes and threads #

今週の講義で学習することの全体像の説明。

Lecture 2: What is a process? #

Processes and Threads

- The operating system manages the execution of applications by allocating necessary resources and switching between them using the processor.

- This allows multiple applications to appear to run simultaneously.

What is a Process

- A process includes a portion of program code and related data being executed by the processor.

- Processes make up the basic unit of execution in most operating systems.

Process Control Block (PCB)

- Each process is tracked using a data structure called a process control block.

- The PCB stores key attributes, including:

- Unique process identifier

- Current state (e.g., running, waiting)

- Priority level for scheduling

- Program counter (address of the next instruction)

- Memory pointers for code and data

- Context data (register values)

- I/O information (e.g., device requests, file usage)

- Accounting data (start time, total execution time)

Managing Processes

- The PCB allows a process to be paused, resumed, or terminated.

- When a process is interrupted, its context (program counter and registers) is saved.

- Another process’s context is then loaded to allow it to run.

- This mechanism supports multi-processing by allowing multiple processes to share the CPU over time.

Lecture 3: Process states #

Process States

- The dispatcher is responsible for switching between processes in memory and sending them to the CPU.

- Each process has its program counter and context data saved when switched out and restored when resumed.

Process Execution and Memory Layout

- Processes occupy varying sizes of memory depending on their instructions.

- Dispatcher uses the program counter to track the current instruction and manages transitions between processes.

Process State Models

- There are two common models for describing process states: the two-state model and the five-state model.

- These are alternative ways to represent the lifecycle of a process.

Two-State Model

- A simplified model where a process is either running or not running.

- Not running includes any process that is waiting for CPU time or blocked.

- Easy to implement but lacks detail and efficiency for complex scheduling.

Five-State Model

- A more detailed and practical model used in modern operating systems.

- Includes the following states:

- New: the process is being created.

- Ready: the process is waiting for CPU time.

- Running: the process is currently being executed.

- Blocked: the process is waiting for an event (e.g., I/O completion).

- Exit: the process has finished or terminated.

This model provides better scheduling and process management by distinguishing between ready and blocked processes.

Lecture 4: Process description #

Process Description

- The operating system is responsible for scheduling processes, allocating resources, and handling I/O requests.

- To manage processes, the OS uses various control structures that store and track important process information.

Types of Control Structures

- Memory tables: Track memory allocation for each process, including main and virtual memory.

- I/O tables: Track the status and usage of input/output devices by processes.

- File tables: Store file status, location, and whether a file is being read or written.

- Process tables: Contain information about each process such as location, state, and resources.

Process Image

- A process is stored as a process image, which includes:

- Program code

- Process data

- Process control block (PCB)

- Stack (used for function parameters and system call data)

Process Control Block (PCB)

- One of the most important structures used to manage processes.

- Contains:

- Unique process identifier

- Current state

- Program counter

- Register data

- I/O and file information

- Execution control and interrupt data

Execution and Memory Management

- Part of the process image is loaded into main memory; the rest remains in virtual memory until needed.

- The OS uses the PCB to switch processes in and out of the CPU, ensuring proper execution order.

Key Points

- Control structures allow the OS to locate, manage, and schedule processes efficiently.

- The PCB and related tables enable the OS to track the current state and resource usage of each process.

Lecture 5: Process control #

Process Control

- Modern operating systems manage processes using two modes: privileged mode (kernel mode) and user mode.

- Privileged mode allows execution of sensitive instructions like memory management and I/O control.

- User mode restricts access and is used for running user-level applications.

Operating System Responsibilities

- The OS is responsible for process management, memory management, I/O device management, and support functions.

Process Creation

- Each new process receives a unique identifier.

- The process is added to the process table.

- Memory is allocated for the process image and its process control block (PCB).

- The PCB is initialized with default values, including the process state (e.g., ready or ready suspend).

- Resources such as files or input devices may be allocated upon request or inherited from a parent process.

Scheduling and Switching

- The OS uses scheduling queues (often linked lists) to manage process states.

- A process switch occurs when the OS regains control, possibly due to:

- End of time slice

- Interrupt

- I/O request or completion

- Memory fault (data not found in main memory)

Memory Management

- The OS maintains memory allocation for both main and virtual memory.

- It manages movement of memory blocks (pages and segments) between main memory and secondary storage.

I/O Device Management

- The OS manages multiple I/O devices.

- Tasks include buffer handling and resource allocation to processes requesting access.

- Devices can interrupt each other depending on usage demands.

Support Functions

- Small utility routines used by the OS for:

- Handling interrupts

- Updating system tables

- Monitoring system performance

- Assisting components like the dispatcher

Lecture 6: Execution of the operating system #

Execution of the Operating System

- The operating system is not a single monolithic entity but a collection of modular programs responsible for specific tasks.

- The processor determines when control is passed to the operating system.

Types of Operating System Execution Models

- There are three main models:

- Non-process kernel

- Execution within user processes

- Process-oriented (process-based) operating systems

Non-Process Kernel

- The OS operates separately from user programs.

- Only user applications are treated as processes.

- OS runs in privileged mode with its own memory region and system stack.

- The kernel restores process context when switching back to user mode.

Execution Within User Processes

- The OS is executed within the address space of user processes.

- Each process image includes:

- Program and data region

- User stack

- Process control block

- Special kernel stack for system functions

- OS routines are executed by the user through interaction.

- Code sharing allows OS routines to be present in multiple user processes.

Process-Oriented Operating Systems

- Kernel functions are implemented as separate processes.

- Enables modular design, easier development, and debugging.

- Well-suited for multi-core or multiprocessing systems.

- Promotes performance improvement through parallel execution.

Lecture 7: Processes and threads #

Processes and Threads

- A process provides the resources for executing program code and includes elements such as code, open handles, unique ID, priority class, and more.

- A thread is a schedulable unit within a process. Every process starts with a primary thread and can create additional threads.

Thread Characteristics

- Threads within the same process share:

- Virtual address space

- System resources

- Exception handlers

- Each thread has its own:

- Thread ID

- Scheduling priority

- Context (registers, stack)

- Thread control block

Thread Creation and Scheduling

- Threads are placed in a ready queue when created.

- On multiprocessor systems, multiple threads can execute simultaneously.

- Single-threading involves one execution path per process.

- Multithreading enables multiple concurrent execution paths within one process.

Advantages of Threads over Processes

- Faster to create and terminate than processes.

- Lower overhead when switching between threads within the same process.

- Threads communicate more efficiently than processes because they share memory and resources.

Thread Model Structure

- Single-threaded model: One thread with shared stack and control structures.

- Multi-threaded model: Shared address space with separate stacks and thread control blocks per thread.

Thread Pool

- A thread pool is a collection of worker threads used to handle tasks asynchronously.

- Reduces the need to frequently create/destroy threads.

- Thread states must be tracked within the pool.

Thread States

- Core states include:

- Running: currently executing

- Ready: waiting to be scheduled

- Blocked: waiting for an event (e.g., I/O)

- Unblocked: event complete, moved to ready

- Finished: task complete, resources deallocated

Synchronization and Resource Sharing

- Threads share address space and resources, so changes by one thread affect others.

- Synchronization is required to prevent data conflicts or deadlocks.

- Improper synchronization may lead to blocked threads or race conditions.

Lecture 8: Types of threads #

Types of Threads

- There are two main types of threads:

- User-level threads (ULT)

- Kernel-level threads (KLT)

User-Level Threads (ULT)

- Managed entirely in user space by the application.

- The kernel has no awareness or involvement in thread management.

- Threads are created and managed using thread libraries and utilities.

- Thread switching does not require kernel involvement, reducing overhead.

- Scheduling can be customized by the application (e.g., round-robin or priority-based).

- No kernel updates are required to support ULT.

Advantages of ULT

- Fast context switching since it avoids kernel mode.

- Greater flexibility in scheduling policies.

- No need for system-level support from the kernel.

Disadvantages of ULT

- A system call from one thread blocks all threads in the same process.

- ULTs cannot take full advantage of multiprocessing because the OS sees only one process.

Kernel-Level Threads (KLT)

- Managed by the operating system or kernel.

- Each thread is known and scheduled independently by the kernel.

- Allows true concurrency; multiple threads can run in parallel on multiple processors.

- If one thread is blocked, others in the same process can continue executing.

- Examples include Microsoft Windows.

Advantages of KLT

- Full support for parallel execution on multiprocessor systems.

- Better responsiveness when threads are blocked.

Disadvantages of KLT

- Thread switching requires a mode switch to the kernel, adding overhead.

- Slower than ULT in terms of creation and context switching.

Combined Approach

- Uses both user-level and kernel-level threads.

- User threads are mapped to kernel threads.

- A blocked user thread does not block the entire process.

- Provides the benefits of both models.

- Example: Solaris operating system.

Lecture 9: Multicore and multithreading #

Multicore and Multithreading Systems

- Multicore systems can enhance performance for applications with many threads, but require careful design to avoid performance issues.

- Concurrent processing handles one thread at a time but switches quickly between them.

- Multiprocessing allows true parallel execution of multiple threads or processes simultaneously.

Thread-Based Approaches in Applications

- Multiprocess applications (e.g., Oracle) use multiple single-threaded processes.

- Java applications support multithreading at the core via the Java Virtual Machine.

- Multi-instance applications (e.g., video editors) can run multiple instances in parallel on multicore systems.

- Multithreading allows multiple instruction streams to run in parallel within a single process, reducing overhead.

Multithreading Characteristics

- Threads in the same process share address space and resources, enabling efficient communication.

- Threads in different processes can use shared memory for communication.

- Thread switching is faster than process switching, but requires synchronization to avoid race conditions and deadlocks.

Windows and Multicore Multithreading

- Windows processes are treated as objects, each with one or more threads.

- Processes and threads in Windows have built-in synchronization capabilities.

- Each process has a security access token, identifying the user and controlling access rights.

- Threads in different processes can run concurrently; threads in the same process can run in parallel on multicore systems.

- Windows uses object-oriented design to manage process creation and execution.

- When a new process is created, it uses a template to define its structure and interface.

- Each process has at least one thread, which can spawn additional threads.

Developer Considerations

- OS handles many aspects of multithreading and multiprocessing, but developers must design applications carefully.

- Efficient use of multicore systems depends on how threads and processes are managed within the application.

Lecture 10: Types of processor scheduling #

Processor Scheduling Overview

- Processor scheduling assigns time slots to processes to maximize efficiency and throughput.

- In a multiprogramming environment, processes alternate between CPU execution and waiting for I/O.

- Scheduling determines which process runs next and manages limited CPU resources effectively.

Types of Scheduling

- Scheduling is categorized by time scale:

- Short-term scheduling

- Medium-term scheduling

- Long-term scheduling

- Input/Output (I/O) scheduling

Short-Term Scheduling

- Manages which process gets the CPU next.

- Activated on events like:

- Process blocking

- Interrupts

- System calls

- Runs frequently and directly affects CPU responsiveness.

Medium-Term Scheduling

- Manages which processes stay in main memory and which are swapped out.

- Helps control the degree of multiprogramming.

- Decides whether to suspend or resume processes from memory.

Long-Term Scheduling

- Determines which programs are admitted as processes into the system.

- Controls the rate at which new processes are created.

- Regulates the overall level of multiprogramming.

I/O Scheduling

- Determines the order in which I/O requests are handled.

- Balances access to I/O devices between competing processes.

Lecture 11: Scheduling algorithms #

Overview of Scheduling Algorithms

- Scheduling algorithms determine how CPU time is allocated to processes.

- Two main types:

- Preemptive: allows interruption of a running process.

- Non-preemptive: process runs until completion or waiting state.

Short-Term Scheduling

- Selects which process from the ready queue will run next.

- Operates frequently and directly affects system performance.

Priority Scheduling

- Each process is assigned a priority.

- Higher priority processes are scheduled first.

- Can be preemptive or non-preemptive.

- Risk of starvation for low-priority processes; aging can prevent this.

- Tie-breaking can be done using first-come-first-served.

Common Scheduling Algorithms

- First-Come-First-Served (FCFS)

- Non-preemptive.

- Simple queue based on arrival order.

- Can result in long average waiting times.

- Round Robin

- Preemptive.

- Each process is assigned a fixed time slice (quantum).

- Uses clock interrupts for switching.

- Fair and starvation-free, but high overhead if quantum is too short.

- Shortest Process Next (SPN)

- Non-preemptive.

- Selects the process with the shortest total execution time.

- Efficient but may cause starvation of longer processes.

- Shortest Remaining Time (SRT)

- Preemptive version of SPN.

- Chooses process with the shortest time left.

- Can interrupt current process for a shorter one.

- Highest Response Ratio Next (HRRN)

- Non-preemptive.

- Prioritizes based on a response ratio: (waiting time + service time) / service time.

- Balances short and long processes to avoid starvation.

- Feedback Scheduling

- Preemptive.

- Used when execution time is unknown.

- Uses multiple queues with descending priorities.

- Processes move down queues as they consume CPU time.

Fair-Share Scheduling

- Used in multi-user systems.

- Allocates CPU time based on user-level shares, not just processes.

- Prevents users from monopolizing resources.

- Priority weights can be adjusted per user (e.g., developers vs. managers).

Week 7: Input-output systems and file systems #

概要

In addition to the processor and a set of memory modules, the third key element of a computer system is a set of I/O modules. Each module interfaces to the system bus or central switch and controls one or more peripheral devices. The I/O module contains logic for performing a communication function between the peripheral and the computer bus.

キーコンセプト

This topic includes:

- disks

- files

- caching

- directories

- redundancy

- bus architecture

- interrupt mechanism

- OMA

- device drivers

- disk scheduling algorithms.

レクチャーリスト

- Input-output (O/I) systems

- Lecture 1: Introduction to input-output systems and file systems

- Lecture 2: Input-output systems

- Lecture 3: Input-Output models

- The File system

- Lecture 4: Device management

- Lecture 5: Disk management

- Lecture 6: Disk scheduling policies

- Lecture 7: RAID

- Cache Memory

- Lecture 8: Cache memory

- Lecture 9: Cache placement policies

- Lecture 10: Cache replacement policies

Lecture 1: Introduction to input-output systems and file systems #

今週の講義で学習することの全体像の説明。

Lecture 2: Input-output systems #

- Input-Output Modules are essential components of computer systems, in addition to the processor and memory.

- I/O devices (e.g., monitors, keyboards, mice) cannot connect directly to the system bus due to differing operation methods, data standards, formats, and transfer speeds.

- I/O modules handle communication between external devices and the system bus, manage data transfer rates, and can control multiple devices.

- Monitors and keyboards are traditional input-output devices used for user interaction. The keyboard sends input signals, which are converted into bit patterns (ASCII or Unicode) by a transducer, then transmitted to the I/O module.

- Monitors display character strings, including printable and control characters (e.g., tab, return). Printable characters include letters, numbers, punctuation, and special symbols.

- Control characters influence the formatting of displayed or printed data.

- Disk drives serve as both input and output devices for data storage and retrieval. They are managed by I/O modules that also provide status updates and error detection.

- In hard disk drives, magnetic patterns are converted into bit data through a transducer and device buffer. Disk arms move radially across disk surfaces to read/write data.

- Key takeaway: Each external device has unique characteristics (data format, speed), and I/O modules act as intermediaries to facilitate compatibility without needing hardware changes to the main computer.

- This modular approach allows for easy integration of new hardware without altering the core system.

Lecture 3: Input-Output models #

Functions of I/O Modules

- Control and timing

- Communication with the processor

- Communication with external devices

- Data buffering

- Error detection

Control and Timing

- Coordinates data flow between processor, memory, and devices.

- Decodes processor commands (e.g., read/write disk sectors).

- Manages timing to prevent resource conflicts.

Status Reporting and Addressing

- Reports device status (e.g., ready, busy, error).

- Each I/O device has a unique address for identification.

- Enables proper routing of commands and data.

Data Buffering

- Handles different transfer rates between CPU/memory and devices.

- Buffers data before sending/receiving to/from devices.

- Prevents data loss and improves efficiency.

I/O Module Structure

- Connects system bus to multiple external devices.

- Contains data registers, status registers, and control logic.

- Interfaces with processor via control lines and with devices via device-specific logic.

I/O Operation Techniques

- Programmed I/O: CPU directly controls all I/O operations, leading to inefficiency.

- Interrupt-Driven I/O: CPU initiates I/O and continues execution; interrupted when I/O completes.

- Direct Memory Access (DMA):

- Bypasses CPU by allowing direct data transfer between memory and I/O module.

- CPU is involved only at start and end of transfer.

- Frees CPU for other tasks.

Advanced: Direct Cache Access (DCA)

- Used for high-speed networks (e.g., Ethernet, Wi-Fi).

- Allows I/O devices to load data directly into CPU cache.

- Reduces processor cycles and improves performance.

- Especially useful for handling gigabyte-scale data transfers.

Lecture 4: Device management #

Functions of Device Management

- Notifies applications of resource availability changes.

- Allows adaptation to newly available or unavailable devices.

- Ensures reliable communication between OS, applications, and hardware.

Device Drivers

- Software that controls specific hardware devices.

- Acts as a translator between OS/applications and hardware.

- Hardware-dependent and OS-specific.

- Simplifies high-level programming by abstracting hardware details.

- Interfaces with various devices (e.g., printers, scanners, network cards).

- Loadable drivers (e.g., in Windows/Linux) optimize memory usage.

Driver Identifiers and Development

- Devices are identified by vendor ID and device ID.

- Driver development requires deep knowledge of hardware/OS.

- Logical device drivers are typically written by OS vendors.

- Physical drivers are often developed by hardware manufacturers.

Driver Frameworks

- User Mode Driver Framework:

- Used for message-based devices.

- Crashes do not affect the system.

- Kernel Mode Driver Framework:

- Used for power management, I/O cancellations, plug-and-play.

- Closer to the hardware; system-critical.

Virtual Device Drivers

- Emulate hardware in virtual environments (e.g., cloud services).

- Intercepts hardware calls and routes them within a virtual machine.

- Operates as function calls between virtual and real hardware interfaces.

I/O Software Layer Organization

- User process initiates I/O request.

- Request passes through multiple abstraction layers:

- General I/O functions (e.g., read, write)

- Device-specific drivers and interrupt handlers

- Hardware-specific I/O operations

Buffering in I/O Operations

- Prevents blocking and improves performance in multi-program environments.

- Locks processes in memory during I/O to avoid being swapped out.

Buffering Techniques

- Single Buffering:

- Uses one buffer between memory and user space.

- Simple and effective for sequential access.

- Double Buffering:

- Uses two alternating buffers.

- Enhances speed and smoothness of I/O.

- Circular Buffering:

- Uses multiple buffers in a cycle.

- Effective for continuous data flow.

I/O Organization and File Systems

- Logical I/O:

- Provides a high-level interface (e.g., open, read, write).

- Abstracts device-specific details.

- Device I/O:

- Converts requests into device-specific commands.

- May use buffering for performance.

- Scheduling and Control:

- Manages I/O scheduling, interrupt handling, and status reporting.

File System Components

- Directory Management:

- Maps file names to identifiers using file descriptors or index tables.

- File System Layer:

- Handles file structure and user operations (e.g., read, write).

- Physical Organization:

- Maps logical file access to physical disk addresses.

- Manages secondary storage allocation.

Lecture 5: Disk management #

Functions of Disk Management

- Manages storage on external memory devices (e.g., hard drives, USBs).

- Organizes data into volumes, partitions, and clusters.

- Provides access through logical structures using controllers and firmware.

- Handles fragmentation, partitioning, and file system formats.

Disk Structure and Access

- Disks are made of platters → tracks → sectors (smallest unit, ~512 bytes).

- Clusters consist of multiple sectors; files are stored in clusters.

- Disk controller translates physical geometry into logical structures.

- OS manages files using logical sector numbers.

- File Allocation Table (FAT) tracks used and free clusters.

Performance Metrics

- Seek Time: Time to move disk head to the correct track.

- Rotational Delay: Time for the sector to rotate under the head.

- Access Time = Seek Time + Rotational Delay.

- Disk Cache: Stores recently accessed sectors for faster I/O.

Fragmentation

- Internal: Wasted space due to allocation rules.

- External: Free space scattered in small blocks.

- Data Fragmentation: Large files broken into scattered chunks.

Disk Partitioning

- Divides disk into logical units (partitions).

- Enables multi-OS setups, separate file systems, or backup regions.

- Primary Partition: Referenced in MBR; one active at a time.

- Extended Partition: Contains multiple logical partitions.

- Trade-offs: Better organization but may reduce usable space and performance.

File Systems

- Manage how files are stored and retrieved on storage devices.

- Btrfs: Snapshotting, mirroring, compression.

- Ext4: Large file and volume support (Linux default).

- F2FS: Flash-friendly for mobile/flash memory.

- JFS: Fast mounting and stable.

- NILFS2: Log-structured, minimizes data loss.

- NTFS: Windows default, supports journaling and access control.

- Journaling:

- Records changes before applying them to enable rollback and fault tolerance.

File Storage Types

- Sequential Files:

- Data stored/accessed in order.

- Contains EOF marker or uses directory info to mark end.

- Indexed Files:

- Key-index pairs map to record locations.

- Index stored as separate file for fast access.

- Hash Files:

- Uses hash function to map keys directly to storage buckets.

- Provides fast access without index overhead.

Lecture 6: Disk scheduling policies #

Functions of Disk Scheduling

- Manages the order of disk I/O operations to optimize access times.

- Reduces average seek time and improves throughput.

- Sits between device I/O layer and hardware in the system stack.

- Maintains I/O request queues for each device in multi-program environments.

Basic Scheduling Policies

- First-In-First-Out (FIFO):

- Processes requests in the order they arrive.

- Fair but may result in poor performance with random access patterns.

- Last-In-First-Out (LIFO):

- Processes the most recent request first.

- Reduces seek time for sequential files.

- Risk of starvation for older requests.

- Priority Scheduling:

- Assigns priority levels to requests.

- Short jobs may preempt longer ones.

- Risk of long delays for low-priority tasks.

- Shortest-Service-Time-First (SSTF):

- Selects request with the shortest seek distance.

- Efficient but can lead to starvation for distant requests.

SCAN and Its Variants

- SCAN:

- Disk head moves in one direction, servicing requests in sequence until the end.

- Then reverses direction.

- Reduces starvation and improves fairness.

- C-SCAN (Circular SCAN):

- Only scans in one direction.

- When reaching the end, returns to start without servicing requests.

- Provides uniform wait time.

- N-step-SCAN:

- Divides request queue into fixed-size segments.

- Each segment is scanned one at a time.

- Prevents a single process from monopolizing the disk.

- FSCAN:

- Uses two queues.

- One is processed while new requests are added to the other.

- Ensures fairness and prevents new requests from blocking ongoing scans.

Lecture 7: RAID #

Functions of RAID

- Combines multiple physical disks into a single logical drive.

- Distributes data using a method called striping.

- Provides redundancy for fault tolerance and performance improvement.

- Allows simultaneous I/O from multiple disks.

- Enables data recovery in case of hardware failure.

RAID 0 – Striping (No Redundancy)

- Distributes data across all disks for parallel I/O.

- Improves performance but offers no fault tolerance.

- Suitable for speed-intensive applications.

- One failure results in complete data loss.

RAID 1 – Mirroring

- Duplicates all data across two disks.

- Provides real-time backup and fault tolerance.

- Requires double the disk space.

- Ideal for critical system files.

RAID 2 – Bit-Level Striping with ECC

- Uses synchronized disks and Hamming code for error correction.

- All disks participate in each I/O.

- High reliability but rarely used due to complexity and cost.

- Replaced by modern error-correcting methods.

RAID 3 – Byte-Level Striping with Parity

- Uses one dedicated parity disk.

- Employs parallel access with small strips.

- Can reconstruct data using XOR if a disk fails.

- High data transfer rate but less efficient for multiple small I/O requests.

RAID 4 – Block-Level Striping with Dedicated Parity

- Independent access to each disk.

- One dedicated parity disk stores parity for all data blocks.

- Efficient for high I/O frequency but suffers from write bottlenecks.

RAID 5 – Block-Level Striping with Distributed Parity

- Parity blocks are distributed across all disks.

- Eliminates single parity disk bottleneck.

- Can tolerate one disk failure.

- Balanced performance and fault tolerance.

RAID 6 – Block-Level Striping with Dual Parity

- Uses two parity blocks for each data block.

- Can withstand failure of two disks.

- Excellent data availability and integrity.

- Slightly slower writes due to dual parity updates.

Lecture 8: Cache memory #

Functions of Cache Memory

- Stores frequently accessed data from main memory.

- Reduces data access latency for the processor.

- Exploits the principle of locality (temporal and spatial).

- Acts as an intermediate layer between CPU and main memory.

Cache Hierarchy

- L1 Cache: Closest to the core, fastest access, smallest size.

- L2 Cache: Slower than L1, larger capacity.

- L3 Cache: Shared among cores, largest capacity, highest latency.

- Data flows: Main Memory → L3 → L2 → L1 → Processor.

Cache Access Process

- Cache Hit: Requested data is found in the cache, returned to CPU.

- Cache Miss: Data is not found; fetched from main memory and loaded into cache.

Cache Organization

- Lines and Blocks:

- Cache consists of multiple lines, each holding a block of K words.

- Memory is block-organized; blocks are mapped into cache lines.

- Index: Identifies specific cache line.

- Tag: Portion of memory address to identify which memory block is stored.

- Control Bits: Track if data has been modified (e.g., dirty bit).

Types of Cache Misses

- Cold (Compulsory) Miss: First-time access; block not previously cached.

- Conflict Miss: Multiple data blocks map to the same cache line.

- Capacity Miss: Cache too small to hold all working data blocks.

Block Transfer & Performance

- Cache and memory transfer happens in blocks (commonly 64 bytes or more).

- Processor may start execution with only partial block loaded.

- Efficient block transfer reduces performance penalties of cache misses.

Lecture 9: Cache placement policies #

Functions of Cache Placement Policy

- Determines where a memory block should be placed in the cache.

- Answers key questions:

- Where to place a new memory block?

- How to check if data is already cached?

- Which block to replace when cache is full?

Direct-Mapped Cache

- Each memory block maps to exactly one cache block.

- Uses index bits and tag bits from the memory address.

- Efficient and simple with low power usage.

- Disadvantage: high chance of conflict; low cache hit rate.

Address Mapping in Direct Mapping

- Index: selects the cache block using mod or least significant bits.

- Tag: stores remaining bits to uniquely identify memory blocks.

- Valid bit: marks if the data in the block is valid.

- Larger blocks (e.g., 2 bytes) improve spatial locality.

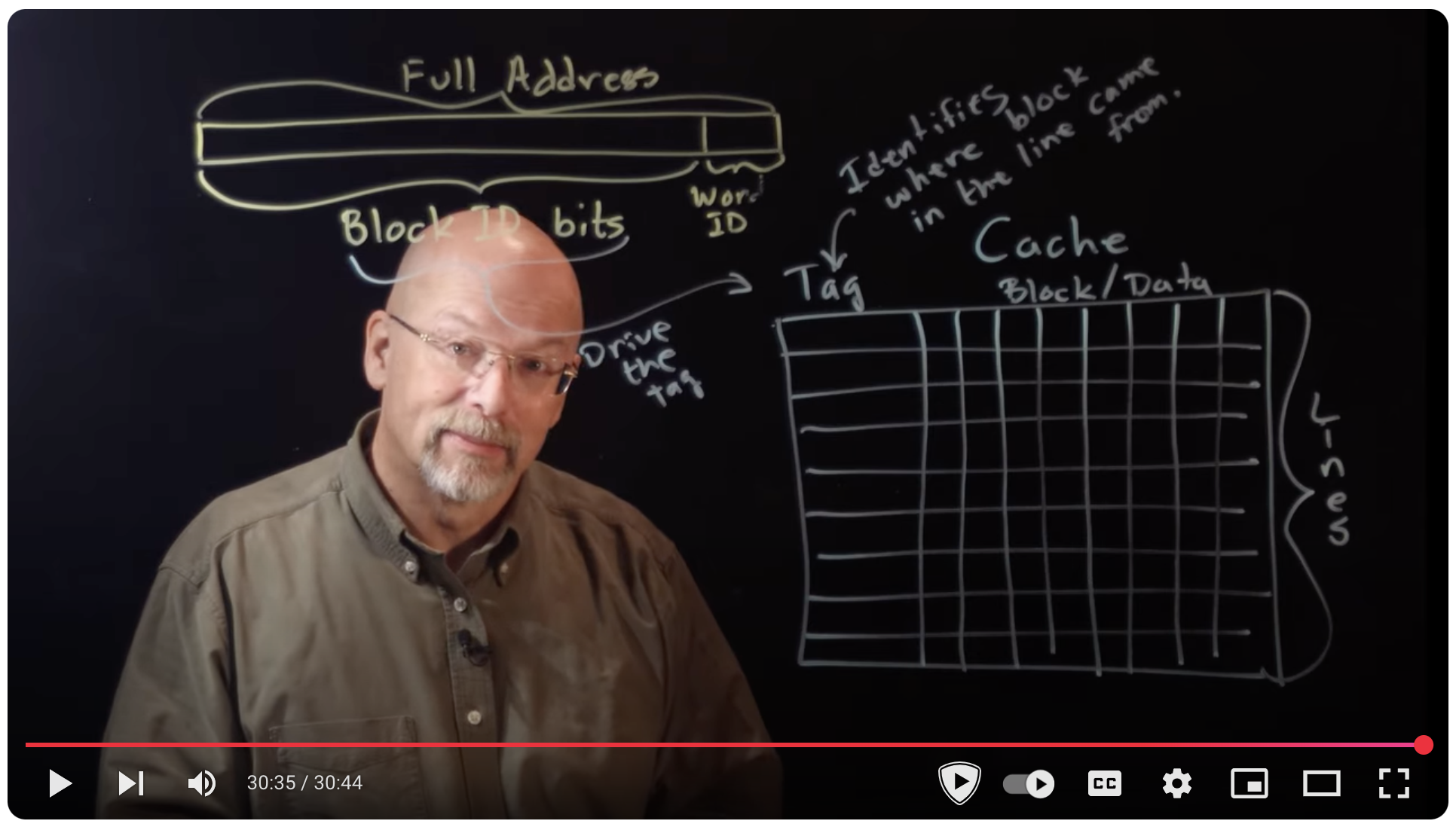

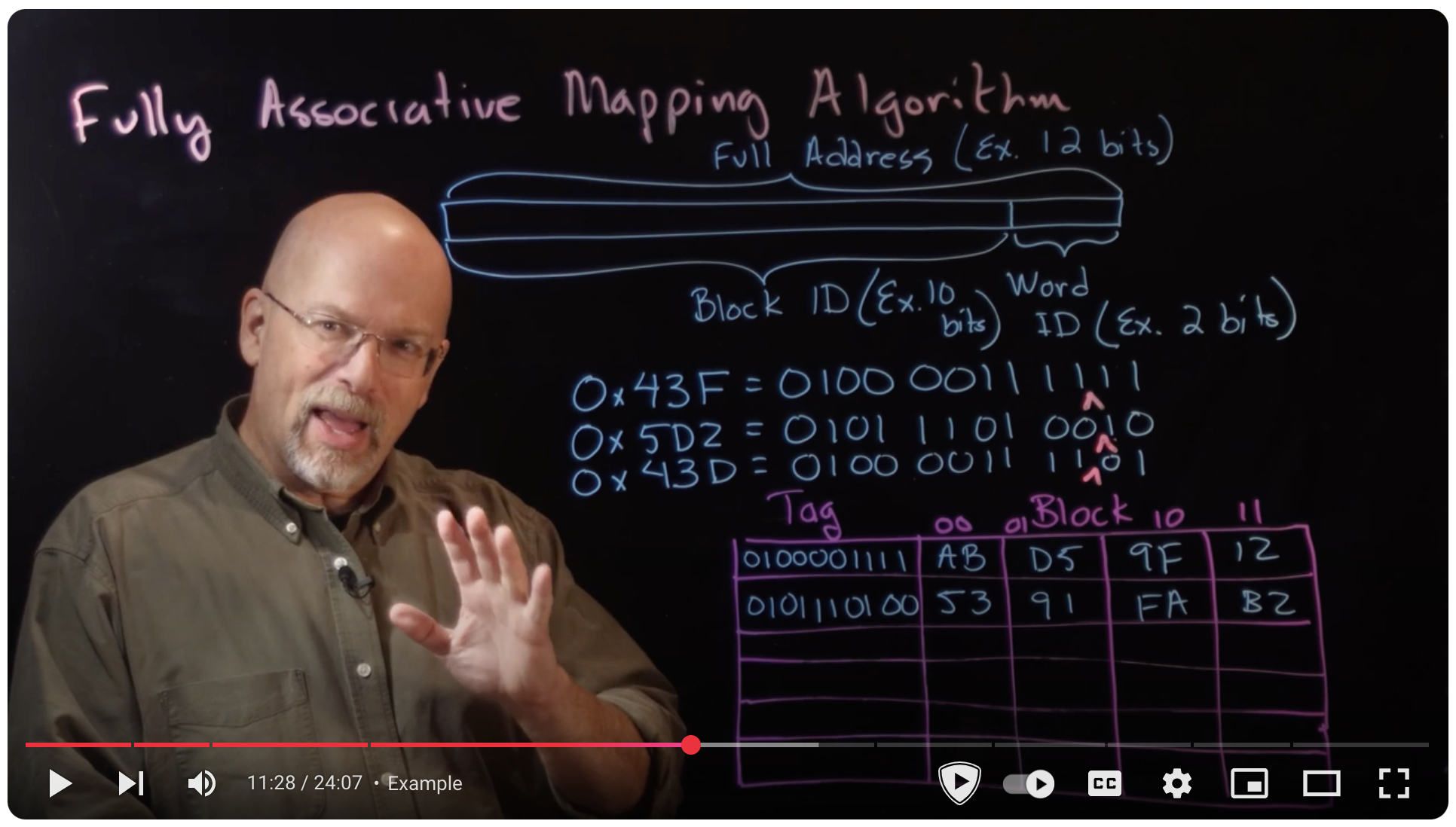

Fully Associative Cache

- Any memory block can go into any cache block.

- No fixed index, entire address is used as tag.

- Flexible and leads to high cache hit rate.

- Disadvantage: requires checking all tags → high computational overhead.

Set-Associative Cache

- Combines features of direct-mapped and fully associative caches.

- Cache divided into sets; each memory address maps to one set.

- Data can go into any block within that set.

- N-way Set Associative: each set contains N blocks.

- 2-way → 4-way → up to fully associative.

Data Lookup and Replacement

- Index bits select set; tags are compared within the set.

- Replacement policy (e.g., LRU) is applied if all blocks in a set are occupied.

- Offers a balance between flexibility and cost.

Lecture 10: Cache replacement policies #

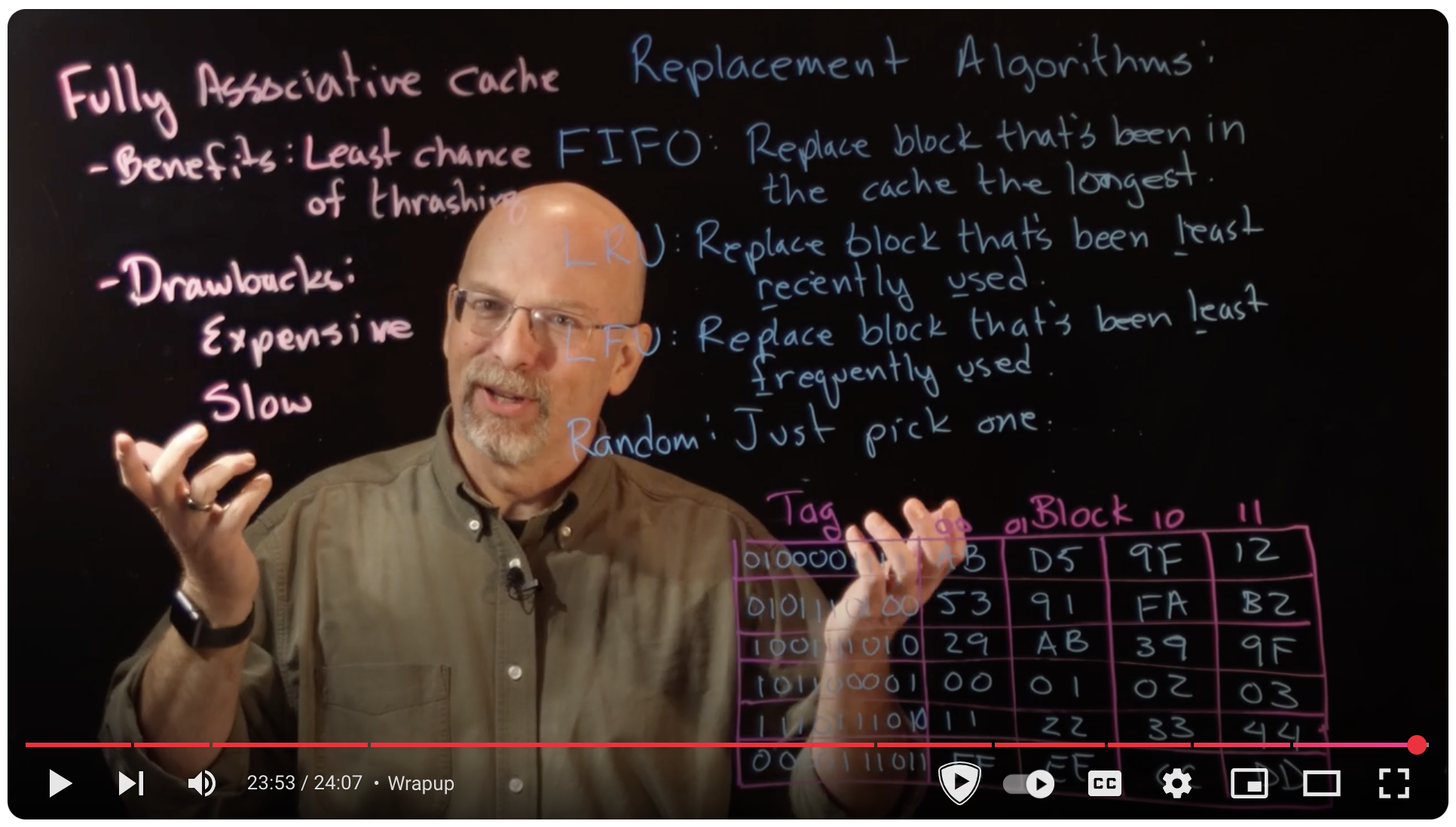

Functions of Cache Replacement Policies

- Determine which cache block to evict when the cache is full.

- Maintain cache size within its maximum capacity.

- Improve performance by keeping frequently or recently used data accessible.

- Also referred to as “eviction policies”.

Key Cache Replacement Algorithms

First-In-First-Out (FIFO)

- Evicts the oldest data in the cache.

- Simple and time-based; ignores usage frequency.

- Does not consider whether data is still actively used.

Least Recently Used (LRU)

- Removes the data that hasn’t been used for the longest time.

- Assumes recently used data is more likely to be used again.

- More effective than FIFO in many scenarios.

Least Frequently Used (LFU)

- Evicts data that has been accessed the fewest times.

- May keep recently accessed data if it’s frequently used.

- Can struggle with tracking and updating usage counts.

Most Recently Used (MRU)

- Opposite of LRU; evicts the most recently accessed data.

- Useful in certain edge cases where older data is more valuable.

- Less common than LRU or LFU.

Further material #

- An introduction to disk drive modeling | IEEE Journals & Magazine | IEEE Xplore

- An empirical analysis of the IEEE-1394 serial bus protocol | IEEE Journals & Magazine | IEEE Xplore

Week 8: Concurrency #

概要

We begin our study of concurrency by describing how to use it in practice. First, we explain where the concurrency in a system comes from and discuss the main ways to express concurrency. Then we describe the difference between ‘hard’ and ’easy’ concurrency: the latter is done by locking shared data before you touch it, the former in subtle ways that are so error-prone that simple prudence requires correctness proofs. We give the rules for easy concurrency using locks and discuss various issues that complicate the easy life: scheduling, locking granularity and deadlocks.

キーコンセプト

This topic includes:

- concurrent programming

- synchronisation

- thread-level parallelism.

レクチャーリスト

- Mutual exclusion and synchronisation

- Lecture 1: Introduction to concurrency

- Lecture 2: Mutual exclusion: Software approaches

- Lecture 3: Principles of concurrency

- Lecture 4: Mutual exclusion: Hardware support

- Lecture 5: Semaphores

- Lecture 6: Monitors

- Lecture 7: Message passing

- Lecture 8: The readers/writers problem

- Deadlock and Starvation

- Lecture 9: Principles of deadlock

- Lecture 10: Deadlock detection, avoidance and prevention

- Lecture 11: An integrated deadlock strategy

Lecture 1: Introduction to concurrency #

今週の講義で学習することの全体像の説明。

Lecture 2: Mutual exclusion: Software approaches #

Functions of Mutual Exclusion

- Prevents multiple processes from accessing shared resources simultaneously.

- Ensures consistent behavior in concurrent systems.

- Protects critical sections — code areas where shared resources are accessed.

Contexts of Concurrency

- Multiprogramming: Multiple processes share CPU time.

- Modular Programming: Complex applications broken into concurrent processes.

- Operating Systems: Often implemented as multiple concurrent threads/processes.

Requirements for Mutual Exclusion

- Only one process can be in its critical section at a time.

- A process halted outside its critical section must not interfere with others.

- No deadlock or starvation should occur when requesting access.

- If no process is in the critical section, one requesting should be granted access promptly.

- A process should spend only a limited time in the critical section.

Key Concepts

- Critical Section: Code that accesses shared resources.

- Entry Section: Checks and waits for access to the critical section.

- Exit Section: Releases the critical section and notifies others.

- Atomic Action: Execution of the critical section is complete and uninterrupted.

Issues in Multithreading

- Thread Interference: Concurrent threads interleave operations on shared data.

- Memory Consistency Errors: Threads have inconsistent views of shared memory.

Software Solutions

- Dekker’s Algorithm:

- Uses flags and a turn variable to alternate access.

- Complex but provides strict mutual exclusion.

- Peterson’s Algorithm:

- Simpler and more elegant.

- Uses an array of flags and a turn variable.

- Widely studied and used in educational settings.

Lecture 3: Principles of concurrency #

Functions of Concurrency

- Enables interleaved or overlapping execution of processes.

- Improves CPU utilization and system efficiency.

- Occurs in single-processor (interleaving) and multiprocessor (parallel) systems.

Key Concepts

- Critical Resource: A shared resource requiring exclusive access.

- Critical Section: Part of the code that accesses a critical resource.

- Thread Switching: OS may pause/resume threads mid-instruction.

- Shared Mutable State: A variable shared between threads that can be modified.

Concurrency Issues

- Race Conditions:

- Occur when outcome depends on the timing/order of thread execution.

- Example: Two threads increment a shared variable

isimultaneously, causing incorrect results.

- Read-Modify-Write Sequence:

- A common pattern in critical sections.

- Must be atomic to prevent interference.

- Thread Interruption:

- Threads can be paused mid-update, leading to inconsistent states.

- Context switching can interrupt one thread and allow another to operate on outdated data.

Example

- Thread 1 and Thread 2 both read and increment variable

i. - If Thread 1 is interrupted before writing back, and Thread 2 updates

i, Thread 1 may overwrite Thread 2’s result. - Final value depends on execution order → race condition.

OS Responsibilities in Concurrency

- Track active processes via process control blocks (PCBs).

- Allocate/deallocate resources fairly (CPU time, memory, files, I/O).

- Protect process memory and resources from unintended access.

- Ensure process output is independent of execution speed.

Lecture 4: Mutual exclusion: Hardware support #

Functions of Hardware Support for Mutual Exclusion

- Enables safe concurrent access to shared resources.

- Provides low-level mechanisms that enforce atomicity.

- Complements software-based synchronization in both uniprocessor and multiprocessor systems.

Interrupt Disabling (Uniprocessor Systems)

- Prevents context switches by disabling interrupts during critical section execution.

- Guarantees mutual exclusion in single-processor systems.

- Simple and effective but:

- Cannot be used in multiprocessor environments.

- Reduces system responsiveness and efficiency.

Limitations in Multiprocessor Systems

- Multiple processors can access shared memory simultaneously.

- Disabling interrupts on one CPU does not affect others.

- Requires hardware-based synchronization primitives.

Hardware Atomic Instructions

- Special machine instructions enforce mutual exclusion by:

- Performing multiple actions (e.g., read + write) atomically.

- Locking memory location during instruction execution.

- Examples: Test-and-Set, Compare-and-Swap.

Advantages

- Works on both single and multiprocessor systems.

- Simple, fast, and easy to verify.

- Supports multiple independent critical sections via different control variables.

Disadvantages

- Busy waiting wastes CPU time while waiting for resource access.

- Starvation may occur if some processes are continually bypassed.

- Risk of deadlock when high-priority processes block low-priority ones holding a resource.

Lecture 5: Semaphores #

Functions of Semaphores

- Used for process synchronization and signaling.

- Help enforce mutual exclusion.

- Prevent race conditions by controlling access to shared resources.

Types of Semaphore Operations

- semWait(s):

- Decrements the semaphore

s. - If

sbecomes negative, the process is blocked.

- Decrements the semaphore

- semSignal(s):

- Increments the semaphore

s. - If the result is ≤ 0, a blocked process (if any) is unblocked.

- Increments the semaphore

Types of Semaphores

- Counting Semaphore:

- Holds a non-negative integer value.

- Supports multiple resource instances.

- Used when multiple processes can access a limited resource concurrently.

- Binary Semaphore:

- Holds only 0 or 1 (like a lock).

- Used for mutual exclusion.

- If

s = 0,semWaitblocks the process. - If

s = 1,semWaitsetss = 0and proceeds. semSignalsetss = 1or unblocks a waiting process.

Queue Behavior

- Strong Semaphore:

- Uses FIFO queue.

- Longest-waiting process is released first.

- Guarantees freedom from starvation.

- Weak Semaphore:

- No specific order for unblocking.

- May lead to starvation.

Mutual Exclusion via Semaphores

- Only one process can manipulate a semaphore at a time.

- Prevents simultaneous modification of shared resources.

- Can use:

- Software-based methods (e.g., Dekker’s, Peterson’s) – high overhead.

- Hardware-based methods (e.g., atomic instructions, interrupt disabling) – more efficient.

Lecture 6: Monitors #

Functions of Monitors

- A high-level synchronization construct for managing concurrent threads.

- Ensures mutual exclusion and coordination between threads.

- Encapsulates shared data and the procedures that access them.

Monitor Structure

- Contains:

- Local data variables (accessible only within the monitor).

- Initialization code.

- One or more procedures (monitor regions).

- Only one thread may execute inside a monitor at a time.

- Threads must acquire the monitor before executing its procedures.

Mutual Exclusion and Cooperation

- Mutual Exclusion:

- Only one thread can execute within a monitor region at a time.

- Protects shared resources from race conditions.

- Cooperation:

- Threads work together by waiting for and signaling conditions.

- Example: A reader thread waits for data to be written by a writer thread.

Condition Variables

- Special variables used for thread synchronization inside a monitor.

- Two key operations:

C.wait():- Suspends the calling thread.

- Releases the monitor for other threads.

C.signal():- Wakes up one thread waiting on the condition

C, if any.

- Wakes up one thread waiting on the condition

Monitor Behavior (Analogy)

- Monitor Entry Set = Hallway (threads waiting to enter monitor).

- Wait Set = Waiting Room (threads waiting on a condition).

- Critical Section / Exclusive Room = Room where only one thread is allowed.

- Scheduler = Chooses which thread can enter the monitor next.

Thread Actions in Monitor

- Entering the monitor = Entering the building.

- Acquiring the monitor = Entering the exclusive room.

- Releasing the monitor = Leaving the exclusive room.

- Waiting on a condition = Going to the waiting room.

- Being signaled = Returning from waiting room to exclusive room.

Lecture 7: Message passing #

Functions of Message Passing

- Facilitates communication and synchronization between processes.

- Useful in single systems, multiprocessor systems, and distributed systems.

- Can be used to implement mutual exclusion.

Message Passing Primitives

send(destination, message)receive(source, message)- Sender may wait (blocking) or proceed (non-blocking).

- Receiver may wait for message or skip if none is available.

Synchronization Requirements

- Receiver cannot receive before a message is sent.

- Synchronization ensures both sender and receiver are coordinated.

Addressing Mechanisms

- Direct Addressing:

- Sender specifies the recipient directly.

- Receiver may specify the sender or receive from any.

- Indirect Addressing:

- Uses mailboxes (message queues) shared among processes.

- Allows decoupling of sender and receiver.

- Supports one-to-one, one-to-many, many-to-one, and many-to-many models.

Message Structure

- Messages may be fixed or variable in length.

- Typically divided into:

- Header: Contains metadata (source, destination, type, etc.)

- Body: Contains actual message data.

- May include fields for:

- Message type

- Sequence number

- Priority

- Links (for message chaining)

Queuing Policy

- Default: FIFO (First-In-First-Out).

- Optionally: priority-based or custom selection criteria by receiver.

Mutual Exclusion via Message Passing

- Shared mailbox initialized with a single token-like message.

- Process must receive the message to enter its critical section.

- After executing, the process sends the message back to the mailbox.

- Ensures only one process enters the critical section at a time.

Lecture 8: The readers/writers problem #

The Readers/Writers Problem

- A classic synchronization problem involving shared data access.

- Readers: Only read the data; multiple readers can read simultaneously.

- Writers: Modify the data; only one writer can access at a time.

- No readers are allowed while a writer is writing.

- Writers must exclude all other readers and writers.

Mutual Exclusion Strategy

- The section of code that accesses shared data is considered a critical section.

- Writers require full mutual exclusion.

- Readers do not need to exclude other readers.

Semaphore-Based Solutions

- Semaphores are used to enforce mutual exclusion for both readers and writers.

- Writers gain exclusive access: block both readers and other writers.

- Readers share access: multiple readers may access simultaneously if no writer is active.

Potential Issues

- Starvation of Writers:

- If readers continuously access the data, writers may be blocked indefinitely.

- This occurs if new readers are always allowed in while writers are waiting.

Solutions to Prevent Starvation

- Block New Readers:

- When a writer indicates intent to write, prevent new readers from entering.

- Existing readers complete, then writer gets access.

- Priority Messaging System:

- A controller process manages access requests via message passing.

- Three mailboxes handle different message types (e.g., read request, write request).

- Writer requests are prioritized by the controller using message metadata.

Lecture 9: Principles of deadlock #

Definition of Deadlock

- A permanent blocking of a set of processes.

- Occurs when each process is waiting for an event that can only be triggered by another blocked process.

- Common in systems where processes compete for shared resources.

Example

- Thread 1 locks Resource A and waits for Resource B.

- Thread 2 locks Resource B and waits for Resource A.

- Both threads are blocked by each other — a deadlock.

Necessary Conditions for Deadlock

- Mutual Exclusion:

- Only one process can use a resource at a time.

- Shared resources cannot be simultaneously accessed.

- Hold and Wait:

- A process holding at least one resource is waiting to acquire additional resources held by others.

- No Preemption:

- Resources cannot be forcibly taken from processes; must be released voluntarily.

- Circular Wait:

- A circular chain of processes exists, each waiting on a resource held by the next.

Lecture 10: Deadlock detection, avoidance and prevention #

Deadlock Strategies Overview

- No single strategy works in all situations.

- Three main approaches:

- Detection

- Avoidance

- Prevention

Deadlock Detection

- Resources are granted freely when available.

- System periodically checks for circular wait conditions.

- Detection uses a request matrix:

- Tracks resource requests per process.

- Marks processes that can finish and release resources.

- Unmarked rows at the end = deadlocked processes.

Deadlock Prevention

- Prevents one or more of the necessary conditions for deadlock:

- Mutual Exclusion:

- Allow shared access where possible (e.g., read-only).

- Hold and Wait:

- Require processes to request all needed resources at once.

- Inefficient and often unrealistic.

- No Preemption:

- Force processes to release resources before making new requests.

- Requires rollback or recovery mechanisms.

- Circular Wait:

- Impose a strict ordering on resource acquisition.

- Mutual Exclusion:

Deadlock Avoidance

- Allows all conditions for deadlock but avoids unsafe states.

- Makes dynamic decisions to avoid reaching a deadlock.

- Two approaches:

- Do not start a process if it might eventually cause deadlock.

- Do not grant a resource request if it might lead to deadlock later.

- Allows more concurrency than prevention.

Lecture 11: An integrated deadlock strategy #

Integrated Deadlock Strategy

- Combines multiple techniques (prevention, avoidance, detection).

- Resources are grouped into resource classes.

- Circular wait between classes is prevented via linear resource ordering.

- Within each class, different strategies may be applied.

Swappable Space

- Prevention strategy: All required resources must be allocated at once.

- Effective if maximum resource needs are known in advance.

- Related to hold-and-wait prevention.

- Avoidance is also possible if requirements are declared early.

Process Resources

- Avoidance strategy is effective: processes declare required resources ahead of time.

- Prevention by ordering is also applicable.

- Suitable for resources like open files or I/O devices.

Main Memory

- Prevention via preemption:

- A process can be swapped out to secondary memory.

- Frees space to resolve potential deadlocks.

Internal Resources

- Prevention via resource ordering:

- Ensures resources are requested in a fixed global order.

- Helps prevent circular wait conditions.

Week 9: Protection and security #

概要

In this topic we provide an overview of security threats. We begin with a discussion of what we mean by computer security. Computer security deals with computer related assets that are subject to a variety of threats and for which various protection measures are taken. We examine two broad categories of computer and network security threats: intruders and malicious software. Cryptographic algorithms, such as encryption and hash functions, play a role both in computer security threats and computer security techniques.

キーコンセプト

This topic includes:

- security threats

- viruses

- worms

- bots

- rootlet

- cryptographic algorithms.

レクチャーリスト

- Computer security

- Lecture 1: Introduction to protection and security

- Lecture 2: Threats, attacks and assets

- Lecture 3: Intruders

- Malicious Software

- Lecture 4: Viruses, worms and bots

- Lecture 5: RootkitsPage

- Lecture 6: Encryption

Lecture 1: Introduction to protection and security #

今週の講義で学習することの全体像の説明。

Lecture 2: Threats, attacks and assets #

Threats, Attacks, and Assets

- The OS plays a key role in ensuring system security and reliability.

- A reliable OS must prevent data loss from crashes or unauthorized access.

User Accounts and Privileges

- Users are assigned accounts with:

- Username and password

- Privileges defined by an administrator

- Administrators can:

- Modify system settings

- Manage users and privileges

- Install or remove software

Auditing and Monitoring

- Auditing software logs:

- Failed login attempts

- Suspicious activity

- Potential sniffing software

- Helps detect unauthorized access or system misuse

Types of Attacks

- Internal Attacks

- Authorized users elevate privileges or bypass access controls

- Memory or file managers may be tricked into exposing protected resources

- External Attacks

- Remote login abuse

- Brute-force password attacks

- Hacking tools available for purchase make attacks easier

CPU-Level Security

- Modern CPUs support privilege levels:

- Privileged Mode: full instruction set, memory/register access

- Non-Privileged Mode: limited instruction set

- Memory protection: upper/lower bounds restrict access

- Privileged instructions are restricted to protect system integrity

Lecture 3: Intruders #

Intruders

- Most computers today are connected to networks, especially the internet.

- This exposes systems to external intruders and various forms of cybercrime:

- Vandalism

- Denial-of-service (DoS) attacks

- Intellectual property theft

Targets of Attack

- Attacks typically target:

- Software vulnerabilities

- Hardware components

- End users (considered weak links)

Evolving Threat Landscape

- Earlier threats included worms (e.g., CodeRed).

- Modern concerns focus on:

- Spyware

- Trojan horses

- Botnets

- The internet is now the primary attack vector.

Goals of Intruders

- Gain unauthorized access to systems

- Escalate privileges

- Steal credentials (e.g., password databases)

Severity of Intrusions

- Benign: Curious individuals exploring systems

- Malicious: Reading/modifying data or disrupting operations

Types of Intruders

- Masquerader

- External attacker

- Gains access via stolen credentials or bypassing authentication

- Misfeasor

- Internal legitimate user

- Abuses existing privileges to access unauthorized resources

- Clandestine User

- Insider or outsider

- Gains administrative control and disables security mechanisms (e.g., auditing)

Lecture 4: Viruses, worms and bots #

Types of Malware Attacks

- Malware can be:

- Local (installed like regular software)

- Remote (executed from another computer over a network)

Local Software Attacks

- Virus

- Infects host programs

- Executes with host

- May replicate or cause damage (e.g., data corruption)

- Worm

- Autonomous program that spreads via networks

- Replicates rapidly, consuming resources

- Can significantly degrade system/network performance

- Trojan Horse

- Disguised as a legitimate program

- Lies dormant until triggered (date or signal)

- Often spread via email attachments

- Spyware

- Collects and transmits user activity data

- Used for:

- Tracking keypresses (passwords, credit cards)

- Building user profiles

- Corporate/industrial espionage

Remote Software Attacks

- Denial of Service (DoS)

- Floods a system (e.g., web server) with requests

- Overloads memory and crashes system

- Distributed Denial of Service (DDoS)

- DoS attack launched from multiple compromised machines (botnet)

- Botnets may be rented out for profit

Phishing

- Social engineering attack via email

- Poses as a legitimate institution to request sensitive info

- Often used to steal:

- Personal information

- Login credentials

- Financial data

Lecture 5: RootkitsPage #

What is a Rootkit?

- A type of malware that provides unauthorized admin-level access to a device.

- Operates near or within the OS kernel, hiding its presence.

- Can target software, firmware, and even hardware.

Installation Methods

- Exploiting vulnerabilities (unpatched OS/software)

- Bundled malware (infected PDFs, pirated media, shady apps)

- Phishing attacks (user unknowingly installs malware)

Rootkit Capabilities

- Hide other tools (e.g. keyloggers)

- Launch DDoS attacks or send spam

- Disable antivirus and security patches

- Provide persistent, stealthy access

Types of Rootkits

- Hardware/Firmware Rootkits

- Target BIOS, routers, or hard drives

- Hard to detect and remove

- Bootloader Rootkits

- Replace OS bootloader

- Load before OS starts

- Memory Rootkits

- Reside in RAM, vanish on reboot

- Affect performance and remain stealthy

- Application Rootkits

- Replace or alter standard programs (e.g. Notepad, Paint)

- Hard to detect by users, often caught by antivirus

- Kernel-Mode Rootkits

- Modify OS kernel functions

- Extremely dangerous; deep system control

- Virtual Rootkits

- Host the OS as a virtual machine

- Avoid modifying the actual OS directly

Famous Example

- Stuxnet (2010)

- Worm that targeted Iranian nuclear facilities

- Likely state-sponsored (believed to be U.S. and Israel)

- Highlighted the use of rootkits in cyber warfare

Lecture 6: Encryption #

Encryption: An Overview

Cryptography vs Encryption

- Cryptography: Study of secure communication.

- Encryption: A cryptographic tool used to encode data so unauthorized parties can’t understand it.

Why Encryption Matters

- Traditional methods like passwords are vulnerable to attacks.

- Encryption ensures confidentiality, even if data is intercepted.

HTTPS and Secure Communication

- HTTP → HTTPS: Secure version using TLS (Transport Layer Security).

- TLS succeeded SSL (Secure Sockets Layer).

- Browsers show a padlock icon to indicate secure TLS communication.

Types of Encryption

- Symmetric-Key Encryption

- Uses a single key for both encryption and decryption.

- Faster but requires secure key sharing.

- Used in HTTPS, SSL, and securing local data.

- Public-Key Encryption (Asymmetric)

- Uses two keys:

- Public Key: Used to encrypt data.

- Private Key: Used to decrypt data.

- Public key is shared, private key is kept secret.

- Adds security for data transmission.

- Uses two keys:

Certificates and Trust

- Challenge: Making sure the public key belongs to the right party.

- Solution: Certificates issued by Certificate Authorities (CAs).

- Validate ownership of keys.

- Web browsers are shipped with trusted CAs.

- Users can inspect certificates of websites.

Further material #

- What is Phishing | Barracuda Campus

Week 9: Multiple processor systems, network programming and the internet #

概要

We study how computers are linked together to share information and resources. Our study will include the construction and operation of networks, applications of networks, multiple processor and multi-core systems. We will also discuss the role of the graphics processing unit (GPU) in modern computing environments.

キーコンセプト

This topic includes:

- multiple processor systems

- network programming

- the internet

- The world wide web

- internet protocols

- client-server.

レクチャーリスト

- Multiple processor systems (MPS)

- Lecture 1: Introduction to multiple processor systems, network programming and the internet

- Lecture 2: Multiprocessor and multicore scheduling

- Lecture 3: Multicore computers – Hardware and software performance

- Computer networks

- Lecture 4: Network fundamentals

- Lecture 5: The internet

- Lecture 6: Internet protocols

Lecture 1: Introduction to multiple processor systems, network programming and the internet #

今週の講義で学習することの全体像の説明。

Lecture 2: Multiprocessor and multicore scheduling #

Multiprocessor and Multicore Scheduling

- Most modern systems contain multiple processors, requiring specialized scheduling strategies.

Types of Multiprocessor Systems

- Loosely Coupled: Each processor has separate memory and I/O channels.

- Functionally Specialized: A general-purpose processor is assisted by embedded specialized processors.

- Tightly Coupled: Processors share main memory and are managed by the operating system.

Granularity in Parallelism

- Granularity refers to the ratio of computation time to communication time.

- It affects scheduling efficiency and system performance.

Categories of Parallelism by Granularity

- Independent Parallelism: No synchronization; similar to multiprogramming on a uniprocessor.

- Coarse and Very Coarse-grained: Some synchronization; requires minimal software changes.

- Medium-grained: Threads within a single process; requires explicit coordination.

- Fine-grained: Many small tasks; enables load balancing but increases overhead.

Levels of Parallelism

- Instruction Level: Approximately 20 instructions.

- Loop Level: Approximately 500 instructions.

- Procedure Level: A few thousand instructions.

- Application Level: Tens of thousands of instructions.

Design Considerations for Scheduling

- Process Assignment: Can be dynamic or dedicated; affects scheduling overhead.

- Multiprogramming on Processors: Enables better utilization and performance.

- Process Dispatching: Simpler methods like FIFO may be sufficient for systems with few processors.

Thread Usage in Multiprocessor Systems

- Threads enable concurrent execution in shared memory space.

- Thread switching is cheaper than process switching.

- Parallel execution of threads across processors improves performance.

Multiprocessor Scheduling Strategies

- Load Sharing: Global ready queue; idle processors pick threads from it.

- Gang Scheduling: Related threads are scheduled to run simultaneously on dedicated processors.

- Dedicated Processor Assignment: Fixed allocation of processors to threads for the entire program duration.

- Dynamic Scheduling: The number of threads may change during execution.

Multicore Thread Scheduling

- Similar to multiprocessor scheduling.

- Operating systems like Windows and Linux perform load balancing.

- Standard strategies may not fully utilize multicore performance.

Lecture 3: Multicore computers – Hardware and software performance #

Multicore Computers and Real-Time Scheduling

- Real-time computing is increasingly important, especially for systems responding to external events.

Real-Time Tasks

- Hard Real-Time Tasks: Must meet deadlines; failure can cause fatal errors.

- Soft Real-Time Tasks: Preferable to meet deadlines, but not mandatory.

- Periodic Tasks: Occur at regular intervals.

- Aperiodic Tasks: Occur irregularly, with specific start/finish deadlines.

Real-Time Operating System Characteristics

- Determinism: Ability to perform operations at predefined times. Depends on interrupt handling speed and system capacity.

- Responsiveness: Time taken to acknowledge and process an interrupt. Affected by interrupt handling and system hardware.

- Response Time: Combination of determinism and responsiveness, critical for meeting timing requirements.

- User Control: Users can adjust task priorities, memory usage, and scheduling policies.

- Reliability: Essential for real-time systems; failures can lead to serious consequences (e.g., in aviation or medical systems).

- Fail-Soft Operation: System should continue operation with minimal loss and maintain stability for critical tasks.

Real-Time Scheduling Strategies

- Static Table-Driven Approaches: Predefined execution schedules created via static analysis.

- Static Priority-Driven Preemptive Approaches: Priorities are assigned via static analysis; no fixed schedule created.

- Dynamic Planning-Based Approaches: Feasibility is analyzed at runtime before accepting a task.

- Dynamic Best-Effort Approaches: No feasibility check; system attempts to meet all deadlines and aborts missed ones.

Lecture 4: Network fundamentals #

Networks and Network Communication

- Networks allow data and resource sharing among multiple users and computers.

- Network software has evolved from simple utilities to complex communication systems supporting billions of devices.

Types of Networks

- Personal Area Network (PAN): Short-range connections (e.g., wireless keyboard/mouse).

- Local Area Network (LAN): Connects computers within a building (e.g., offices, file and printer sharing).

- Metropolitan Area Network (MAN): Covers a community or city-sized area.

- Wide Area Network (WAN): Covers large geographical distances (e.g., the Internet).

Network Topologies

- Bus Topology: All devices connected to a single communication line (logical bus).

- Star Topology: Devices connected to a central node (access point).

- The distinction lies in whether communication is direct or mediated by a central device.

Protocols

- Protocols define rules for network communication.

- Ethernet-based bus networks use CSMA/CD (Carrier Sense Multiple Access with Collision Detection).

- Devices listen before transmitting and handle collisions with random delays.

Combining Networks

- Repeaters: Extend signals across long buses.

- Bridges: Forward messages based on destination addresses; filter unnecessary traffic.

- Switches: Multi-port bridges connecting several buses like wheel spokes.

Process Communication

- Inter-Process Communication (IPC): Processes exchange data to coordinate.

- Client-Server Model: Clients request services from a server (e.g., print servers).

- Peer-to-Peer (P2P) Model: Processes act as both service providers and consumers (e.g., messaging, games).

Distributed Systems

- Cluster Computing: Independent computers work together like a supercomputer.

- Grid Computing: Loosely coupled systems using idle computing resources (e.g., scientific projects).

- Cloud Computing: Virtualized, on-demand computing resources (e.g., AWS EC2).

Lecture 5: The internet #

The Internet

- The Internet (capital I) is a global system of interconnected networks that originated from 1960s research.

- Its purpose was to enable communication across networks and maintain connectivity despite local disruptions.

Internet Structure and ISPs

- The Internet is organized hierarchically through Internet Service Providers (ISPs).

- Tier 1 ISPs: High-speed, global backbones of the Internet.

- Tier 2 ISPs: Regional providers connected to Tier 1.

- Tier 3 (Access) ISPs: Provide connections to individual homes and businesses.

- End systems or hosts (e.g., PCs, smartphones) connect to the Internet through access ISPs.

IP Addressing and Domain Names

- Devices on the Internet are assigned unique IP addresses (e.g., 192.168.0.1).

- IP address allocation is managed by ICANN and distributed to ISPs in blocks.

- Domain names offer a human-readable alternative to IPs and must be registered through ICANN-approved registrars.

- DNS (Domain Name System) translates domain names into IP addresses via name servers.

- A DNS lookup is used to resolve a domain name to an IP address.

Internet Applications

- Early applications included email, basic web browsing, and text messaging.

- Email uses applications like Outlook or Thunderbird and is transmitted using network protocols.

- VoIP (Voice over Internet Protocol) allows voice communication over the Internet (e.g., Skype, Zoom).

- Streaming audio and video (e.g., Netflix) now dominates Internet traffic.

Streaming Methods

- Unicast: One sender transmits data to one receiver.

- En-unicast: One sender transmits to many receivers individually.

- Multicast: One sender sends a single message to multiple clients via special routing.

- Most media services use OnDemand unicast streaming.

Content Delivery Networks (CDNs)

- CDNs are distributed server networks that store and deliver media closer to end users.

- They reduce latency and improve scalability for large-scale streaming platforms.

Lecture 6: Internet protocols #

Internet Protocols

- Internet communication is managed through a four-layer hierarchy: application, transport, network, and link layers.

- Each layer consists of software responsible for different stages of message transmission.

Application Layer

- Contains software (clients/servers) for tasks like file transfers and media playback (e.g., FTP).

- Uses DNS to translate domain names to IP addresses.

- Sends and receives messages through the transport layer.

Transport Layer

- Ensures proper message formatting and breaks long messages into packets.

- Adds sequence numbers for reassembly at the destination.

- Passes packets to the network layer for routing.

- Receives packets from the network layer and reconstructs the original message.

- Uses port numbers to direct messages to appropriate application software.

Network Layer

- Decides packet routing based on forwarding tables.

- Determines the next hop and passes packets to the link layer.

- Recognizes when packets reach their destination and passes them to the transport layer.

Link Layer

- Handles the physical and data link aspects of transmission.

- Responsible for the actual transfer of packets across physical network hardware.

Port Numbers and Conventions

- Port numbers help identify which application should receive data (e.g., port 25 for SMTP email).

- Applications listen on specific ports to receive relevant packets.

TCP/IP Protocol Suite

- Collection of standards supporting internet communication.

- Includes protocols like TCP (Transmission Control Protocol) and IP (Internet Protocol).

TCP vs UDP

- TCP (Reliable Protocol):

- Connection-oriented.

- Ensures delivery with acknowledgments and packet retransmissions.

- Suitable for applications where reliability is crucial (e.g., email, web).

- UDP (Unreliable Protocol):

- Connectionless and faster.

- No acknowledgment or retransmission.

- Suitable for low-latency applications (e.g., online gaming, streaming).

Use Case: UDP in Gaming

- Player data (e.g., position, appearance) is sent rapidly to a central server.

- Missed packets are acceptable due to high frequency.

- Server-side prediction may be used to compensate for dropped packets.

Further material #

- Intro to CUDA - An introduction, how-to, to NVIDIA’s GPU parallel programming architecture - YouTube

- Vint Cerf - INTERNET HALL of FAME PIONEER - YouTube

Week 11, 12 #

最終課題の期間。課題はプログラムの実装とレポートの提出。

- Rust 言語を使用して、キャッシュメモリをシミュレートするプログラムを実装する。

- 実装内容を詳述したレポートを最大 2,000 ワードで記述して提出する。

Write a cache simulator with source code which takes a memory trace file as input, simulates the hit/miss behaviour of a cache memory on this trace, and outputs the total number of hits, misses, and evictions.

まず、課題で何を実装するよう求められているかの理解が少々難解。その理解のためには以下の動画が参考になる。

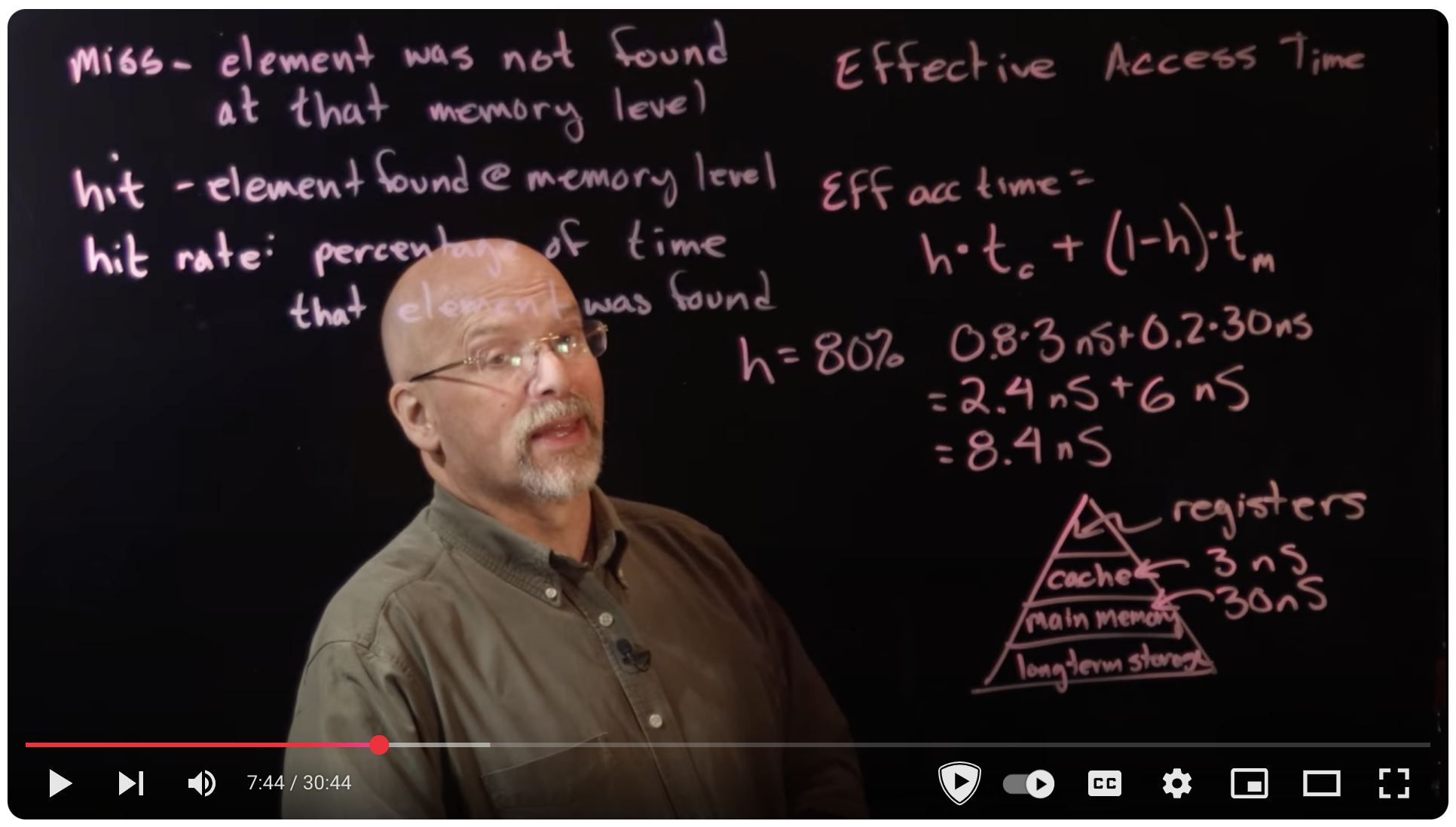

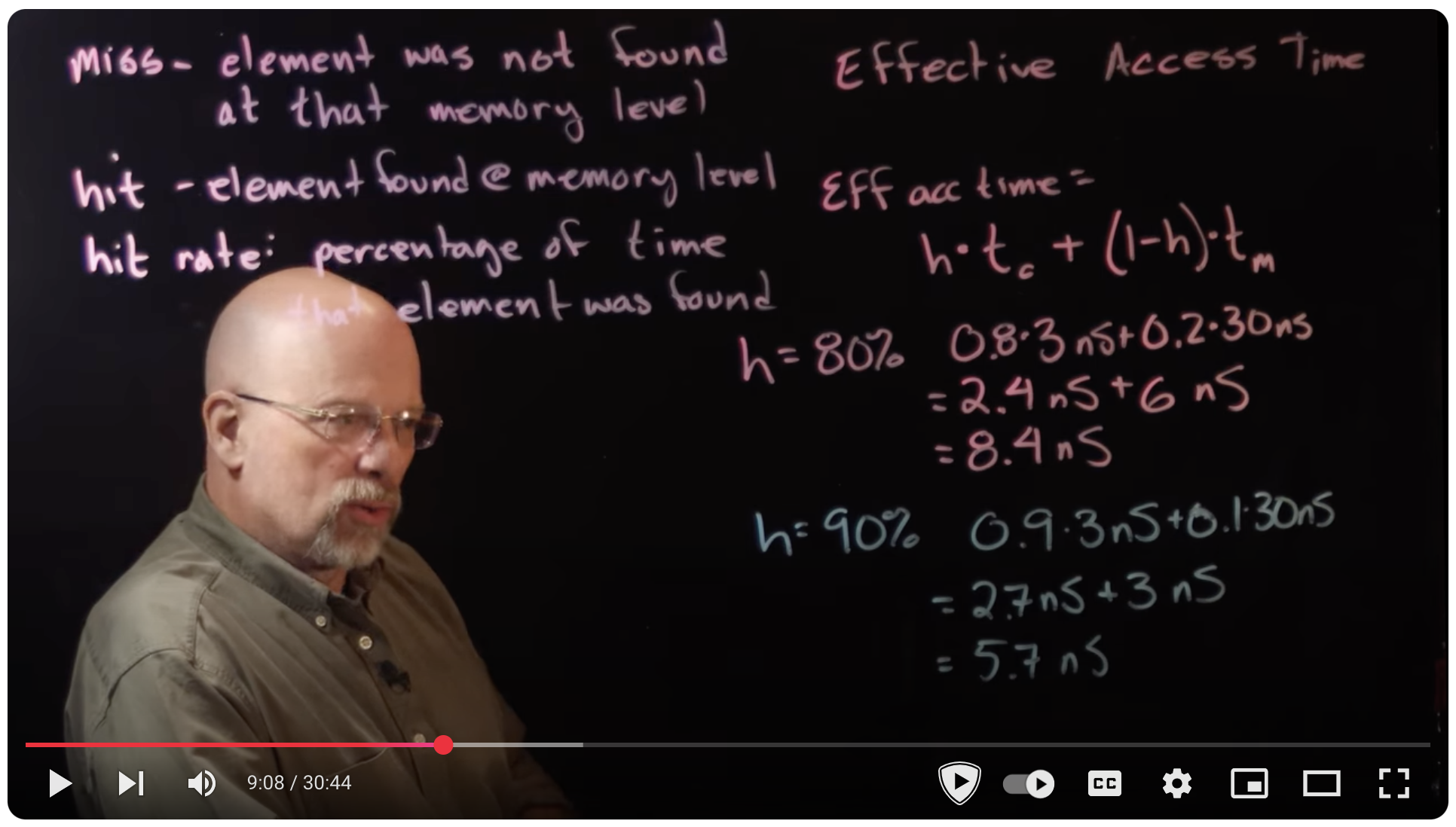

- Ep 073: Introduction to Cache Memory - YouTube

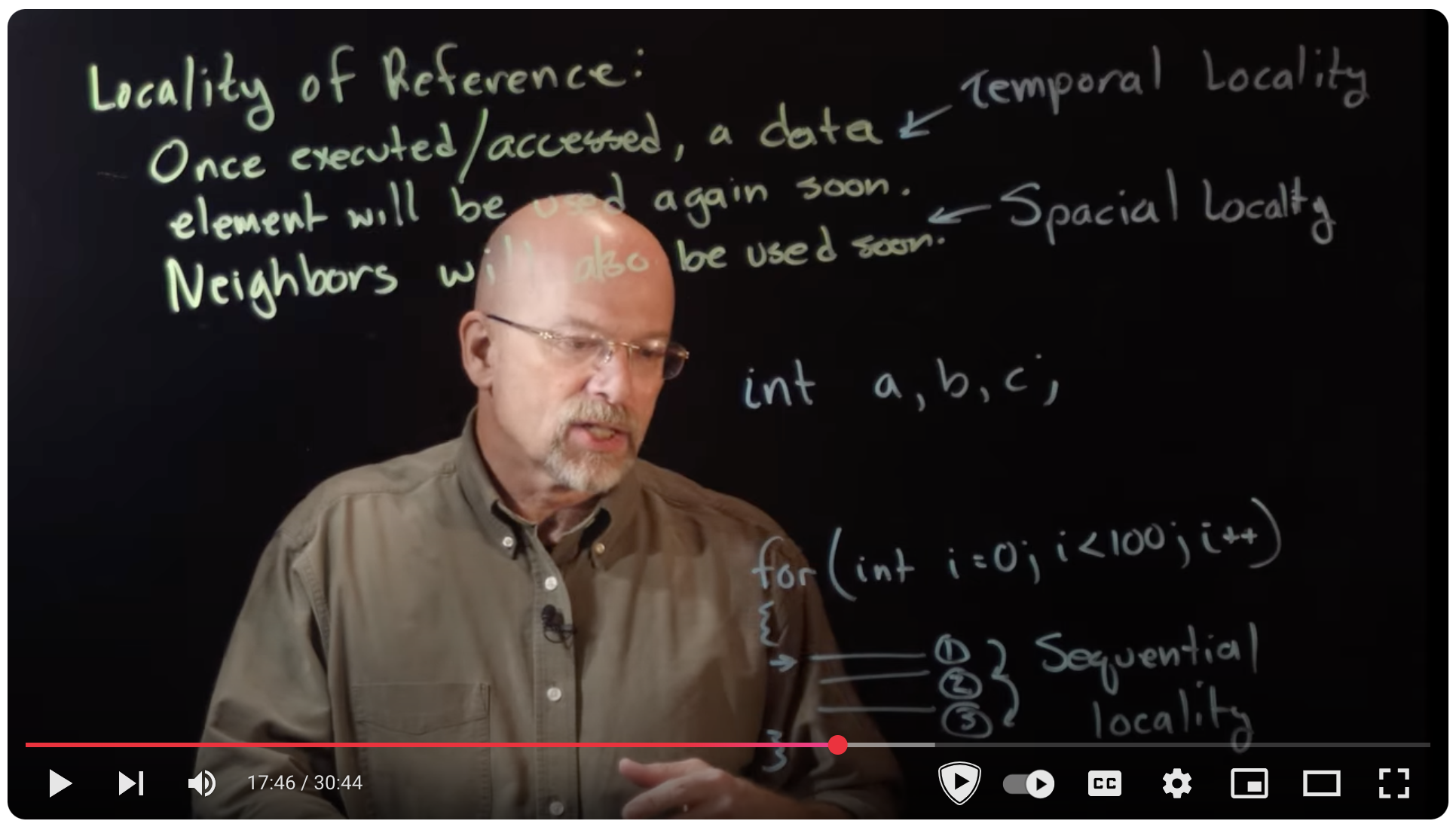

- Ep 074: Fully Associative Caches and Replacement Algorithms - YouTube

- Ep 075: Direct Mapped Caches - YouTube

Ep 073: Introduction to Cache Memory - YouTube #

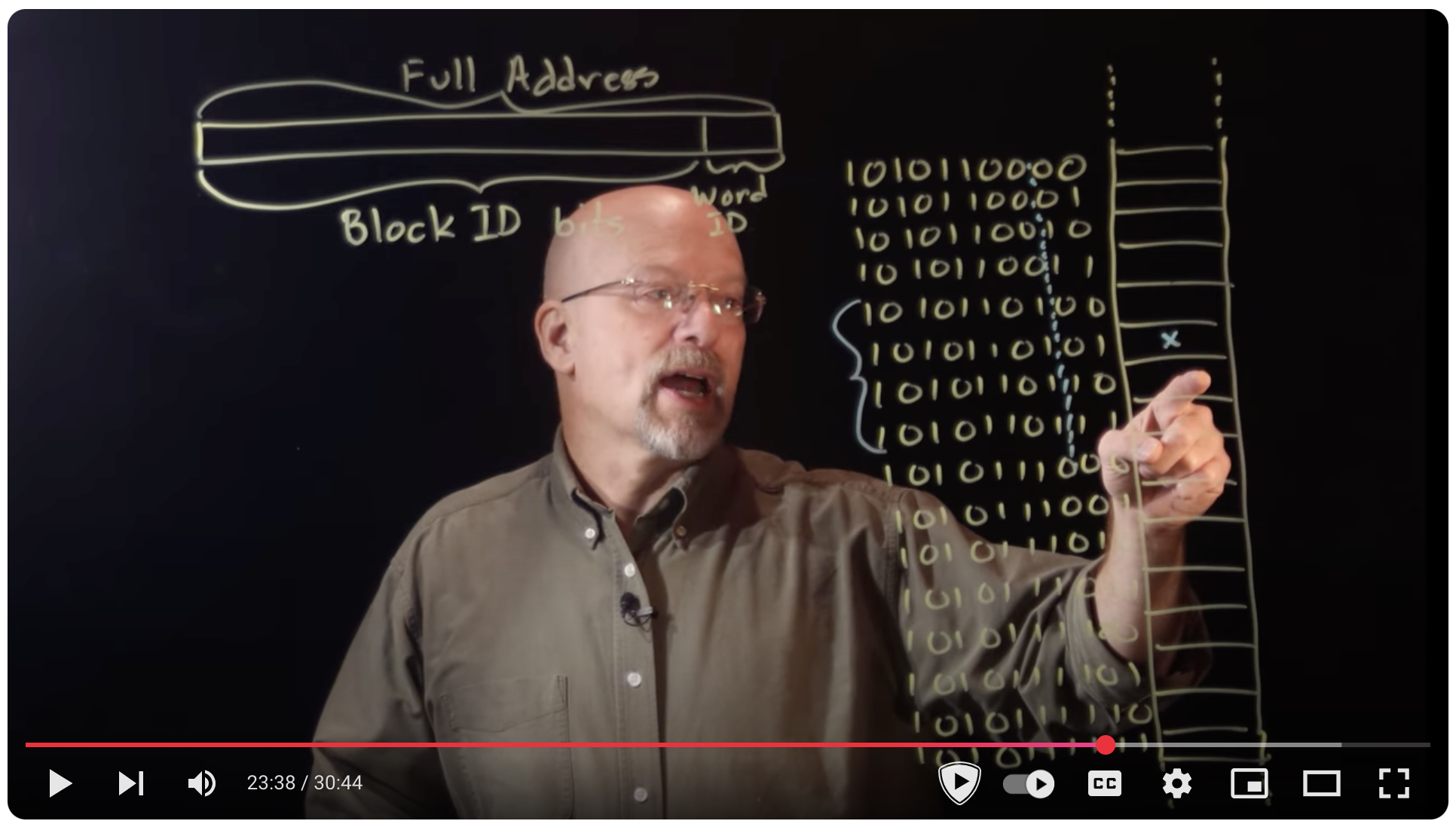

Ep 074: Fully Associative Caches and Replacement Algorithms - YouTube #

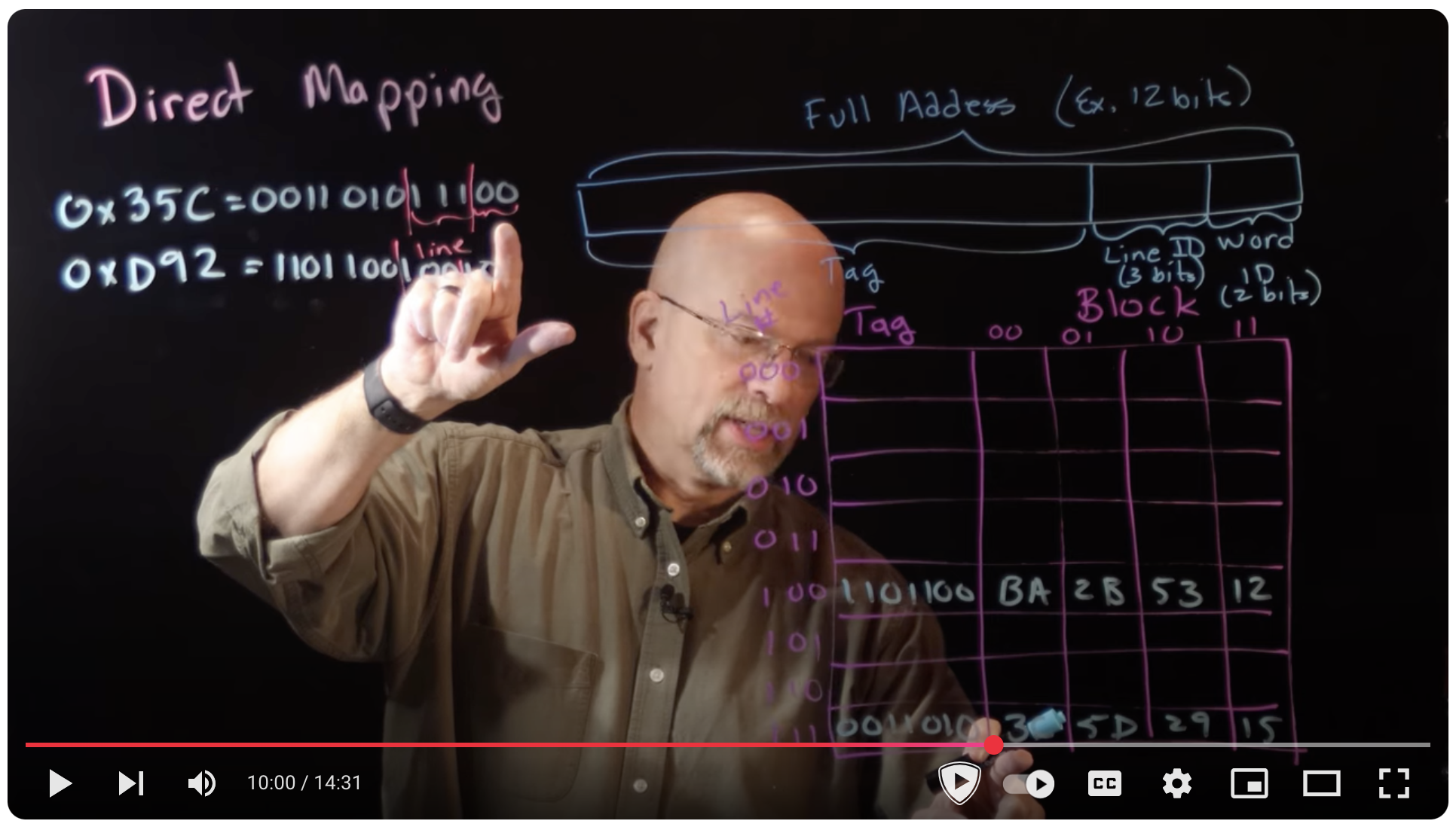

Ep 075: Direct Mapped Caches - YouTube #